Late last year, an organization called “Data for Black Lives” hosted a conference at MIT in Cambridge, Massachusetts. The goal of the event was to understand how algorithms are being used to reinforce inequality and perpetuate injustice, and to learn how we can use algorithms to fight bias and promote civic engagement. One speaker I listened to stated that “Algorithms are not objective, fact, unbiased or neutral. They are opinions imbedded in code.”

For the most part, I agree with her point that algorithms are not objective, but I would modify it a little: they are not objective or unbiased just because they are math-based. Believing that an algorithm is completely objective because it has math at its core is a trend that is being referred to as “mathwashing.”

We must be conscious and deliberate about building objectivity and fairness into our algorithms. This is possible when we bring the affected community into the design and deployment process.

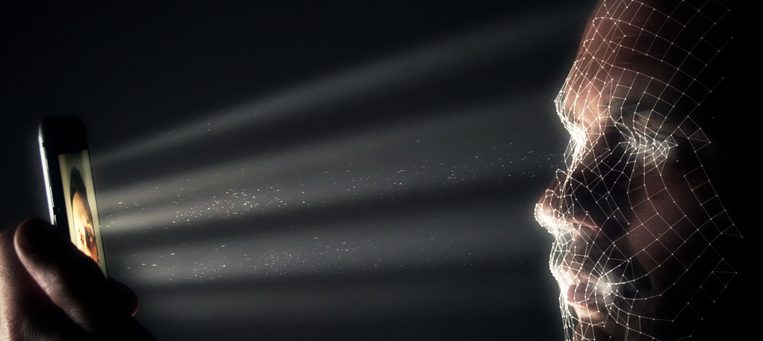

The Atlantic published an article discussing studies which have shown that some facial recognition software used by public law enforcement agencies may be racially biased. There was no clear proof that these biases were intentional; they quite possibly may have been “introduced unintentionally at a number of points in the process of the design and the deployment of the system.”

With this in mind, and speaking specifically with regard to the public sector, there must be checks and balances in how we build and deploy algorithms. We must ensure that they perform as we intended: that they ensure the safety of the people we serve and provide them with quality, equitable service. As stated by Fred Benenson, Kickstarter’s former data chief, “Algorithm and data-driven products will always reflect the design choices of the humans who built them.” Therefore, algorithms should include the insight and input of the community at large.

Let me explain this point using a real-world example of sorts. Let’s say, oh I don’t know, there is a nation in Africa called Wakanda. And let’s say that some really bad guys were trying to steal a precious metal from this nation, but they were stopped by a team of superheroes. Unfortunately, during the conflict between the bad guys and the superheroes, the downtown business district of Wakanda, which is a very large public marketplace where business people sell their goods from stalls, was demolished.

Because of this, Wakanda received a very large amount of money to rebuild the marketplace from donations obtained via the United Nations. Now the government of Wakanda must ensure that each vendor gets the appropriate amount of money and resources that they need in order to restart their businesses. To ensure that the process is swift, fair and equitable, they decide to build an algorithm that will inform the government’s leadership on how to prioritize who should get the help first and how much they should get.

We know that bias introduced in the creation of an algorithm, and even in the data flowing into it, could inadvertently turn any program into a discriminator, so Wakanda wants to ensure that two things happen during the creation of this system. The first is that input on requirements, constraints, workflow, process, performance and business logic comes directly from the citizens who lost their businesses. The second is that the outcomes from the execution of the algorithm are shared immediately with the vendors so they can respond with feedback. So when money is disbursed to a vendor, this is shared immediately with the community so that they can follow along with the process and raise their thoughts and concerns if need be.
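To make those two requirements a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the factor names, the weights, the `Vendor` and `PublicLedger` classes and the proportional split are hypothetical, not a description of any real system. The point is only that the weights come from the vendors themselves, and that every disbursement lands in a record the community can read and comment on.

```python
from dataclasses import dataclass, field


@dataclass
class Vendor:
    name: str
    # Hypothetical need factors, each scaled from 0 to 1.
    factors: dict


@dataclass
class PublicLedger:
    """A record the whole community can read and respond to."""
    entries: list = field(default_factory=list)
    feedback: list = field(default_factory=list)

    def record(self, vendor_name, amount, score):
        self.entries.append(
            {"vendor": vendor_name, "amount": amount, "score": score}
        )

    def comment(self, vendor_name, text):
        self.feedback.append((vendor_name, text))


def priority_score(vendor, weights):
    # Weighted sum of the factors; the weights are assumed to be
    # agreed on with the affected vendors, not set by the developers.
    return sum(w * vendor.factors.get(name, 0.0) for name, w in weights.items())


def disburse(vendors, weights, budget, ledger):
    # Highest-priority vendors first; the budget is split in proportion
    # to each score, and every payment is logged as it is made.
    ranked = sorted(vendors, key=lambda v: priority_score(v, weights), reverse=True)
    total = sum(priority_score(v, weights) for v in ranked)
    for vendor in ranked:
        score = priority_score(vendor, weights)
        amount = round(budget * score / total, 2) if total else 0.0
        ledger.record(vendor.name, amount, score)
    return ledger


# Made-up inputs, for illustration only.
community_weights = {"stall_damage": 0.5, "inventory_lost": 0.5}
vendors = [
    Vendor("vendor_b", {"stall_damage": 0.5, "inventory_lost": 0.0}),
    Vendor("vendor_a", {"stall_damage": 1.0, "inventory_lost": 0.5}),
]
ledger = disburse(vendors, community_weights, budget=1000, ledger=PublicLedger())
ledger.comment("vendor_b", "Inventory loss was not counted for my stall.")
```

A comment like the last line is exactly the kind of feedback that should flow back into the weights and the data before the next round of payments.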

To show the importance of this type of 360-degree engagement with the community being targeted by an algorithm, let’s go back to the example of the facial recognition software. A study by organizers of the team that tests the accuracy of facial recognition software for the National Institute of Standards and Technology (NIST) found that “algorithms developed in China, Japan and South Korea recognized East Asian faces far more readily than Caucasians. The reverse was true for algorithms developed in France, Germany and the United States, which were significantly better at recognizing Caucasian facial characteristics.”

This shows us that the environment in which an algorithm is created, including those who contribute to the development of that algorithm, can influence the accuracy and impact of the results.
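One simple check and balance that follows from this is to measure accuracy separately for each group of people an algorithm is applied to, rather than reporting a single overall number. The sketch below shows the idea in Python. The group labels, the record format and the sample data are all made up for illustration; they are not taken from the NIST study or from any real system.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: fraction of correct predictions}.

    Each record is a (group, predicted, actual) tuple. The field names
    are hypothetical placeholders for whatever an evaluation set holds.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    # Difference between the best-served and worst-served groups.
    return max(per_group.values()) - min(per_group.values())


# Made-up evaluation records, for illustration only.
sample = [
    ("group_a", "match", "match"),
    ("group_a", "match", "match"),
    ("group_a", "no_match", "match"),
    ("group_a", "no_match", "no_match"),
    ("group_b", "match", "match"),
    ("group_b", "no_match", "match"),
    ("group_b", "match", "no_match"),
    ("group_b", "no_match", "no_match"),
]
per_group = accuracy_by_group(sample)
print(per_group)
print(accuracy_gap(per_group))
```

A large gap between groups does not say where the bias came from, but it is a signal that the people in the worse-served group need to be part of the conversation about the design and the data.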

As discussed at the Data for Black Lives conference, there is definitely some truth to the statement that algorithms are made up of someone’s opinion and experience. Therefore, we should ensure that the opinions and experiences of the people who will be impacted by an algorithm are captured in its design, so that we can remove as much bias as humanly possible. Only then will we truly reap the full benefit of fairness in algorithms and, subsequently, their ability to be a change agent for the public sector.

Amen Ra Mashariki is part of the GovLoop Featured Contributor program, where we feature articles by government voices from all across the country (and world!).
