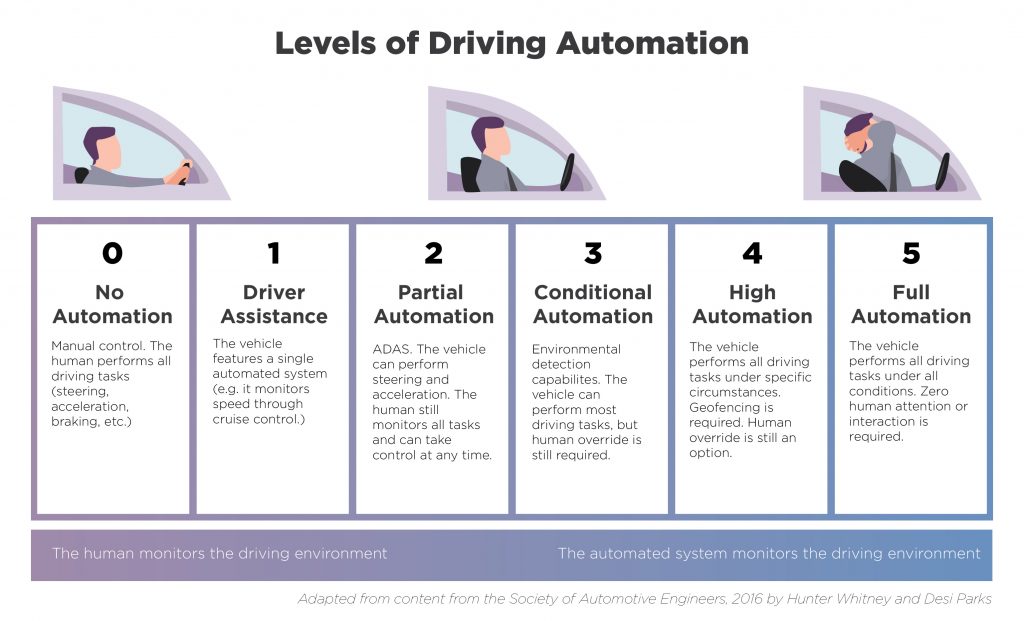

As artificial intelligence (AI) begins to play an increasingly central role in a wide range of government systems, the question of exactly where control and responsibility will land becomes more difficult to answer. In an age of autonomous vehicles, the phrase “who’s in the driver’s seat?” takes on a different meaning.

As the scale, speed and complexity of working with data outstrip our capacity to manage it, the need for tools like AI for both government and the private sector is undeniable. But as with any powerful tool, the ways AI is designed and deployed can lead to substantial benefits or great harm.

That’s where human-centered design (HCD) can play a vital role in tipping the balance toward the side of benefits and establishing effective new forms of trust and accountability. This article will discuss why HCD goals for making AI controllable, comprehensible and predictable are so important and how the U.S. government is uniquely positioned to be an exemplar of this approach.

Human-Centered AI: Framing and Outcomes

HCD has been largely embraced, albeit not always well understood, as a way to make products and services more useful, safe and appealing for the people who use them. Arguably, there is no more important place to apply HCD than AI; the result is often called human-centered AI (HCAI). AI design decisions may not be as visible as they are on a bottle cap that is easier for arthritic hands to open or on a usable website, but the impact of HCAI, from health clinics to battlefields, is more profound and far-reaching. HCAI offers forward-looking government agency staff opportunities to create real and beneficial digital transformations that help their customers and organizations.

HCD is a complex and nuanced topic, so for the moment, I’ll note just a few main tenets. The primary one is contained in the name: problem-solving approaches that consistently place human needs and objectives at the center of the design effort. That’s not to say other considerations are unimportant, but they serve human goals.

Another key element of HCD is working hard to properly frame both the problems and the solutions. One common framing treats human control versus automation as a zero-sum game, in which increased automation necessarily means less human control. This may be less a truism than a failure of imagination, and it can lead to poorly designed systems. According to Ben Shneiderman, a computer science professor at the University of Maryland and a thought leader in HCAI, with the right framing, context and design approaches, human control and automation can both increase, as represented in this chart.

Even subtleties in how AI and machine learning (ML) system design approaches are described can significantly affect the outcome. For example, the phrase “humans in the loop,” which refers to people collaborating with an AI or ML system to help train and tune it, can be problematic because it can bias the design mindset. To Shneiderman, “humans in the loop” places people in a lesser role. “The AI mentality is if we have to, we’ll put a human in the loop.” From his perspective, systems should be designed with humans at the center and enhanced with assistive technologies. Shneiderman’s alternative formulation is “Humans in the group, computers in the loop.”

HCAI from Healthcare to Defense

Across civilian and defense agencies, Shneiderman believes that if human-centered design is devoted to making AI “controllable, comprehensible, and predictable,” there is a lot of room to improve operations and add to the public good. These ingredients will lead to better products, services and outcomes overall. “AI is not in aircraft cockpits because the aviation community doesn’t trust it – it’s considered as too unpredictable,” he said.

One example where HCAI has the potential to amplify good outcomes is monitoring claims at the Centers for Medicare & Medicaid Services (CMS). Shneiderman recalls, “A few years ago, I downloaded an amazing spreadsheet from CMS, which displayed the claims from different clinics. A few of these clinics had sent in tens of thousands of claims, which was way beyond their capacity. The extreme number of claims was so large that CMS would benefit from the additional support of AI and ML tools to detect more subtle patterns of false claims related to specific procedures, thereby improving fraud prevention.”

He added that there are more subtle uses of human-centered AI:

- Giving physicians guidance about different kinds of medical treatments.

- Offering users of navigation systems multiple routes with estimated arrival times.

- Helping digital camera users by setting lighting and focus, while allowing users to compose their own photos.

- Giving information and recommendations to operators of factory, transportation, and hospital control rooms.

AI for the defense sector has had its share of controversy, but the military is an important arena for thinking about AI and the benefits and dangers of who or what is in control. An HCAI approach may look at the issues through a more focused lens, such as distinguishing defensive from offensive systems. From Shneiderman’s perspective, “defensive systems are better candidates for AI while for offensive systems, there should be more human intervention.”

He noted that the military is very strong on chain of command. “Admirably, there’s a big focus on responsibility and accountability,” he said. The military is also diligent about after-action reports. There are good practices in the military community, he said, and they should help going forward. “There’s the hope that AI is going to take the right actions, but if it’s unpredictable, then that’s a serious problem,” Shneiderman said, adding, “If you don’t know what actions your AI teammate is going to take next, then shut it down.”

Trust and Guard Rails

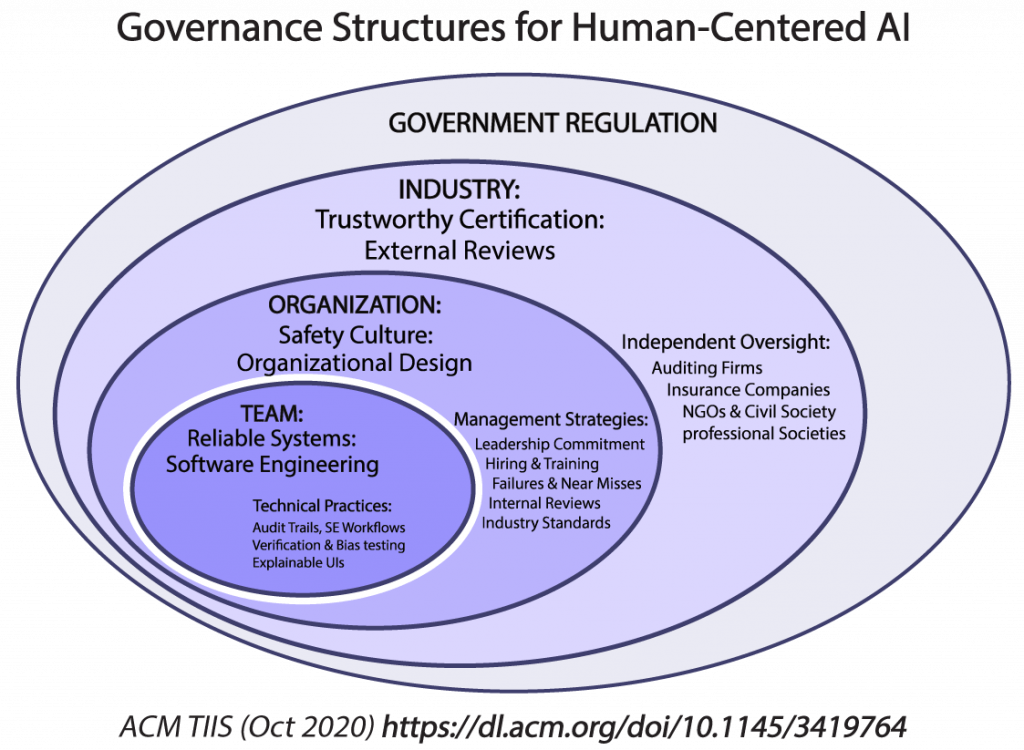

While autopilot is an established technology, most of us would probably not have enough trust and confidence to board a commercial airliner with only an AI system and no pilots or crew aboard.

Shneiderman believes, “It’s a cliché that any regulation limits innovation, but that’s really not true; government regulation of automobile safety and efficiency triggered enormous innovations and really led to better cars. Ultimately the auto industry embraced those rules because it is what their customers wanted.” Similarly, he noted, the European Union’s General Data Protection Regulation (GDPR) triggered an outpouring of tens of thousands of papers about “explainability,” which means ensuring that the reasons for AI/ML decisions are clearly explained to audiences with varying degrees of technical knowledge. An explanation could, for example, tell a loan applicant what they need to do to be approved. The GDPR does not tell organizations exactly how to meet explainability goals; it expects them to determine the best approach for themselves.

Government can help advance some goals, and industry can figure out how to accomplish them. This visual suggests one way this might be organized.

Source: Ben Shneiderman, “Bridging the Gap Between Ethics and Practice: Guidelines for Reliable, Safe, and Trustworthy Human-centered AI Systems,” https://dl.acm.org/doi/10.1145/3419764

Conclusion

Although there will be vigorous debate and discussion about this topic, developing thoughtful HCAI systems should lead to better outcomes for people now and in the future. The U.S. government is well-positioned to benefit from and help shape that future.

Hunter Whitney is a senior human-centered design (HCD) strategist, instructor, and author who brings a distinct UX design perspective to data visualization and analytics. Hunter is currently working at eSimplicity as a Principal HCD Strategist and has advised corporations, startups, government agencies and NGOs to help them achieve their goals through a thoughtful, strategic design approach to digital products and services.
