As we approach the 151st anniversary of Lincoln’s Gettysburg Address,[1] certainly one of the most significant orations ever delivered, we do well to remember its close: the very end of the last of the ten simple, deeply moving sentences that make up the Address.

In these few, memorable words, a war-weary Lincoln charges all of us with ensuring that “government of the people, by the people, for the people, shall not perish from the earth”.[2]

Most would agree that our government should reflect our national character and values, and serve us in accordance with our expressed needs.

So how does the government get to know who “we the people” really are, and what we expect of it? Traditionally, through elections, town halls, and direct communications from constituents. But as technology and the pace of communication have evolved, are these traditional methods enough? Should the government be paying attention to all of our public utterances? Can it even do that?

Note the emphasis on public utterances. This is not about whether the government does, or should, listen in on private communications. Rather, let’s consider what people say when they don’t care who hears them, or when they would like to be heard as widely as possible. In 1863, that meant publishing letters to the editor or editorials, or giving soapbox speeches in public places. In 2014, it means social media: Facebook posts, YouTube videos, Tweets, and more.[3]

So how can the government listen to what people are saying, out loud, in public, on these social channels?

It’s actually quite easy. Businesses have been doing it for years, both to understand how the public perceives their brands, products, and services, and to develop and position new products and services that meet evolving consumer interests.[4]

Today, each of the leading social media platforms publishes open, freely accessible application programming interfaces (APIs) that can be used to filter public contributions by keyword, sentiment, geographic origin, or any combination of these and other attributes.
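As a concrete illustration of that kind of filtering, here is a minimal Python sketch that builds a query URL for Twitter’s 2014-era REST API v1.1 search endpoint (`search/tweets.json`), using its `q`, `geocode`, and `count` parameters together with the `:)` search operator, which Twitter’s search syntax of that era treated as a positive-attitude filter. It only constructs the URL: actually sending the request requires OAuth credentials, and Twitter’s endpoints and access terms have changed considerably since 2014. The function name `build_search_url` and the example coordinates are illustrative assumptions.

```python
from urllib.parse import urlencode

# 2014-era Twitter REST API v1.1 search endpoint (assumption: access terms have
# changed since then, and real requests also need OAuth credentials).
SEARCH_URL = "https://api.twitter.com/1.1/search/tweets.json"


def build_search_url(keywords, lat=None, lon=None, radius_mi=None, count=100):
    """Return a search URL for public Tweets matching the keywords,
    optionally limited to a circle of radius_mi miles around (lat, lon)."""
    params = {"q": " ".join(keywords), "count": count}
    if lat is not None and lon is not None and radius_mi is not None:
        # The v1.1 geocode parameter takes "latitude,longitude,radius".
        params["geocode"] = f"{lat},{lon},{radius_mi}mi"
    return SEARCH_URL + "?" + urlencode(params)


# Hypothetical query: positive-attitude Tweets about an election near Washington, D.C.
url = build_search_url(["election", ":)"], lat=38.8977, lon=-77.0365, radius_mi=10)
print(url)
```

Keeping URL construction separate from the network call makes the query easy to inspect, or to hand to cURL, before any credentials are involved.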

For example, consider Twitter. At present, Twitter has 271 million active users (about 62 million in the U.S.) sending 500 million Tweets per day. Many of us know that a Tweet can carry a message of up to 140 characters, but the full Tweet object can be ten to twelve times that size once all of the accompanying metadata is counted.[5] This metadata describes the sender and her geographic location, the date and time the Tweet was sent, whether it has been “favorited” or “retweeted”, whether it contains entities such as URLs or images, and much more.
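To make that metadata concrete, the sketch below parses a heavily trimmed, made-up Tweet shaped like the v1.1 Tweet object and pulls out a few of the attributes just mentioned. Field names such as `created_at`, `user.screen_name`, `coordinates`, `retweet_count`, `favorite_count`, and `entities` follow that format, but every value is invented, real Tweets carry many more fields, and the helper `summarize` is hypothetical.

```python
import json

# A heavily trimmed, made-up Tweet in the style of a v1.1 Tweet object.
raw = """{
  "created_at": "Wed Nov 19 18:30:00 +0000 2014",
  "text": "Four score and seven years ago... #Gettysburg http://t.co/example",
  "user": {"screen_name": "example_user", "location": "Gettysburg, PA"},
  "coordinates": {"type": "Point", "coordinates": [-77.2311, 39.8309]},
  "retweet_count": 12,
  "favorite_count": 30,
  "entities": {
    "hashtags": [{"text": "Gettysburg"}],
    "urls": [{"expanded_url": "http://example.com/address"}]
  }
}"""


def summarize(tweet):
    """Pull a few metadata fields out of a parsed Tweet dict."""
    # "coordinates" is null on Tweets that carry no exact geotag.
    coords = (tweet.get("coordinates") or {}).get("coordinates")
    return {
        "sender": tweet["user"]["screen_name"],
        "sent_at": tweet["created_at"],
        # GeoJSON order is [longitude, latitude].
        "lon_lat": tuple(coords) if coords else None,
        "retweets": tweet.get("retweet_count", 0),
        "favorites": tweet.get("favorite_count", 0),
        "hashtags": [h["text"] for h in tweet["entities"]["hashtags"]],
        "urls": [u["expanded_url"] for u in tweet["entities"]["urls"]],
    }


tweet = json.loads(raw)
print(summarize(tweet))
```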

Note that the free Twitter API gives access to a 1% random sample of all Tweets. Tapping the full Twitter “firehose” requires special permission and is very expensive, both in fees and in the hardware, software, and network bandwidth needed to consume the full volume of Tweets in a meaningful way. Nevertheless, as Twitter points out, “[f]ew applications require this level of access. Creative use of a combination of other resources and various access levels can satisfy nearly every application use case.”[6] And as every student of Statistics 101 learns, a properly drawn random sample of sufficient size yields estimates that closely mirror those of the full population it was drawn from.
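The statistical point can be checked with a little arithmetic. For a proportion p estimated from a simple random sample of n items, the approximate 95% margin of error is 1.96 × √(p(1 − p)/n). The Python sketch below applies that formula to a 1% sample of the 500 million daily Tweets cited above (n = 5 million), then simulates drawing a 1% sample from a made-up population of one million Tweets. It assumes the sample really is a simple random one; the margin covers sampling error only, not how well Twitter users represent the wider public.

```python
import math
import random


def margin_of_error(p, n, z=1.96):
    """Approximate 95% margin of error for a proportion p estimated
    from a simple random sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)


# 1% of 500 million Tweets per day, as cited in the text.
daily_sample = 500_000_000 // 100
print(f"Worst-case margin at n={daily_sample:,}: ±{margin_of_error(0.5, daily_sample):.4%}")

# Simulation on a made-up population: 1,000,000 Tweets, 30% of which mention a topic.
rng = random.Random(1863)  # fixed seed so the run is repeatable
population = [1] * 300_000 + [0] * 700_000
sample = rng.sample(population, len(population) // 100)  # 1% simple random sample
estimate = sum(sample) / len(sample)
print(f"True share 30.00%, 1% sample estimate {estimate:.2%}")
```

For n = 5,000,000 and the worst case p = 0.5, the margin works out to roughly ±0.04 percentage points, which is why a well-drawn 1% sample of so large a stream is usually enough for estimating shares.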

So what’s required to capture and analyze the content of Tweets? Not very much. A Twitter developer account (free). A few lines of Python, PHP, or JavaScript. You can even capture Tweets with a command-line utility such as cURL.
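Twitter’s 2014-era streaming endpoints (such as `statuses/sample`, which serves the random sample) delivered one JSON object per line, interspersed with blank keep-alive lines and non-Tweet messages such as deletion notices. The minimal sketch below, assuming the stream has already been captured (for example, saved to a file by cURL), reads such newline-delimited JSON and keeps only Tweets. The sample messages are made up, and `iter_tweets` is a hypothetical helper name.

```python
import io
import json


def iter_tweets(lines):
    """Yield Tweet dicts from a newline-delimited JSON stream, skipping
    blank keep-alive lines and non-Tweet messages such as deletion notices."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # blank keep-alive line
        message = json.loads(line)
        if "text" in message:  # deletion notices and other control messages lack "text"
            yield message


# Made-up stand-in for a captured stream (e.g. a file saved by cURL).
captured = io.StringIO(
    '{"id_str": "1", "text": "Government of the people", "user": {"screen_name": "a"}}\n'
    "\n"
    '{"delete": {"status": {"id_str": "0"}}}\n'
    '{"id_str": "2", "text": "by the people, for the people", "user": {"screen_name": "b"}}\n'
)

for tweet in iter_tweets(captured):
    print(tweet["user"]["screen_name"], tweet["text"])
```

Because `iter_tweets` accepts any iterable of lines, the same function works on an open file or a live response body.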

You can capture Tweets that contain certain keywords (with or without “hashtags”), from specific geographic areas, that reference certain URLs or domains, or that match any of the other metadata elements that travel with each Tweet.
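The kinds of matching listed above can be sketched as a local filter over already-parsed Tweets. This is a simplification: the real streaming API did such filtering server-side through parameters like `track` and `locations`, with its own matching rules. In this hypothetical version, every supplied keyword must appear in the text, at least one listed hashtag must be present, a linked URL must contain one of the given domains, and the Tweet’s point must fall inside a bounding box given as (west longitude, south latitude, east longitude, north latitude), the corner order the `locations` parameter used. The function `matches` and the sample Tweets are illustrative.

```python
def matches(tweet, keywords=(), hashtags=(), domains=(), bbox=None):
    """Return True if a parsed Tweet meets every criterion that is supplied.

    keywords: all must appear in the text (case-insensitive substring match)
    hashtags: at least one of these (without '#') must be present
    domains:  at least one linked URL must contain one of these strings
    bbox:     (min_lon, min_lat, max_lon, max_lat) containing the Tweet's point
    """
    text = tweet.get("text", "").lower()
    if any(k.lower() not in text for k in keywords):
        return False
    entities = tweet.get("entities", {})
    tags = {h["text"].lower() for h in entities.get("hashtags", [])}
    if hashtags and not tags & {h.lower() for h in hashtags}:
        return False
    urls = [u.get("expanded_url") or "" for u in entities.get("urls", [])]
    if domains and not any(d in url for url in urls for d in domains):
        return False
    if bbox is not None:
        point = (tweet.get("coordinates") or {}).get("coordinates")
        if point is None:
            return False  # no exact geotag, so it cannot be placed in the box
        lon, lat = point  # GeoJSON order: [longitude, latitude]
        min_lon, min_lat, max_lon, max_lat = bbox
        if not (min_lon <= lon <= max_lon and min_lat <= lat <= max_lat):
            return False
    return True


tweets = [
    {"text": "Long lines at the polls today #vote",
     "entities": {"hashtags": [{"text": "vote"}], "urls": []},
     "coordinates": {"type": "Point", "coordinates": [-77.03, 38.90]}},
    {"text": "Polls are closing soon",
     "entities": {"hashtags": [], "urls": [{"expanded_url": "http://www.pbs.org/newshour/"}]},
     "coordinates": None},
]
dc = (-77.12, 38.79, -76.91, 38.99)  # rough box around Washington, D.C.
print([matches(t, keywords=["polls"], bbox=dc) for t in tweets])
```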

Once you have them, you can perform “sentiment analysis” to determine whether the captured Tweets are generally positive or negative in mood, and to what degree. Again, this can be done with a few lines of Python or another scripting language.
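Here is a minimal lexicon-based sketch of sentiment scoring: count words from a positive list and a negative list, then report (positive − negative) / (positive + negative), which gives a score from −1 (entirely negative) to +1 (entirely positive). The two word lists and the sample Tweets are tiny, hypothetical stand-ins; practical tools use much larger, tuned lexicons.

```python
import re

# Tiny, hypothetical word lists for illustration only.
POSITIVE = {"good", "great", "love", "thanks", "hope", "proud", "happy"}
NEGATIVE = {"bad", "angry", "hate", "sad", "unfair", "worse", "fail"}


def sentiment(text):
    """Score text from -1.0 (all negative) to +1.0 (all positive);
    return 0.0 when no lexicon words are found."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    return 0.0 if pos + neg == 0 else (pos - neg) / (pos + neg)


tweets = [
    "Proud of our town hall turnout, great questions!",
    "Another unfair tax. So angry.",
    "Polls open at 7am",
]
scores = [sentiment(t) for t in tweets]
print(scores, sum(scores) / len(scores))  # per-Tweet scores and their average
```

Averaging the scores over many captured Tweets gives a rough overall mood. Libraries such as NLTK’s VADER analyzer (`SentimentIntensityAnalyzer`) apply the same lexicon idea with a lexicon tuned for social-media text and rules for negation and emphasis.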

For a compelling example of Twitter data used to create a visual map of public engagement, see PBS NewsHour’s account of how news of Ferguson spread across the country via Twitter: http://www.pbs.org/newshour/rundown/news-ferguson-spread-across-country-via-twitter/

So don’t let worries about cost or complexity keep you from tapping into what the public is saying about the issues that matter to you!

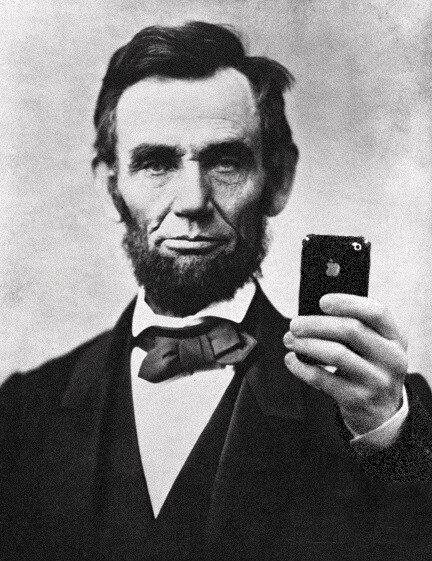

By the way, if Lincoln had Twitter, he could have shared the entire Gettysburg Address in 11 Tweets.
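Splitting a long text into Tweet-sized pieces is a simple word-wrap problem. The sketch below uses Python’s standard `textwrap.wrap` to break text at word boundaries into chunks of at most 140 characters (the limit at the time), shown here on the Address’s opening sentence. The helper name `to_tweets` is illustrative, and the exact number of chunks for the full Address depends on which version of the text is used.

```python
import textwrap


def to_tweets(text, limit=140):
    """Split text at word boundaries into chunks of at most `limit` characters."""
    return textwrap.wrap(text, width=limit)


opening = ("Four score and seven years ago our fathers brought forth on this continent, "
           "a new nation, conceived in Liberty, and dedicated to the proposition "
           "that all men are created equal.")
chunks = to_tweets(opening)
for i, chunk in enumerate(chunks, 1):
    print(i, len(chunk), chunk)  # Tweet number, its length, and its text
```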

Jim Tyson – Word to the Wise

Jim Tyson (www.linkedin.com/in/jimtyson1/) is a senior IT executive with more than 30 years of experience. He has a passion for human nature and information technology, and works to understand the relationship between the two to create productive environments.

Please share your comments and thoughts below, and tweet them to @JimT_SMDI.

[1] Delivered on November 19, 1863.

[2] Of course, none of us was there, yet Lincoln’s powerful words surely spoke to future generations as well as to those who were present.

[3] According to Statista, as of September 2014, the most popular social media Web sites by percent share of total visits were Facebook (58.88%), YouTube (18.86%), Google+ (2.74%), Twitter (2.64%), Yahoo! Answers (1.58%), LinkedIn (1.1%), Pinterest (1.07%), Instagram (0.8%), Tumblr (0.67%), and Reddit (0.64%). http://www.statista.com/statistics/265773/market-share-of-the-most-popular-social-media-websites-in-the-us/.
