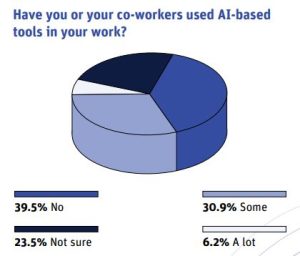

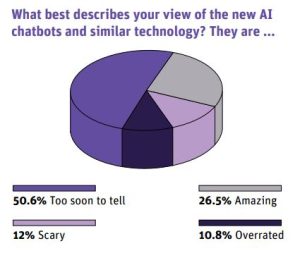

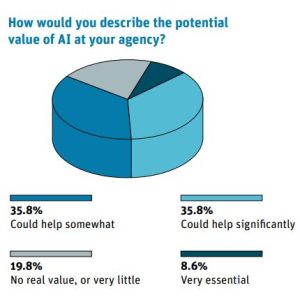

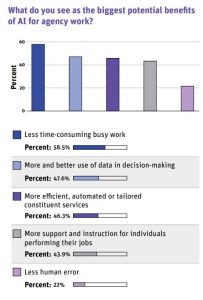

We asked readers of our Daily Awesome newsletter and attendees at a recent event on AI literacy for their thoughts about AI. Fewer than half of respondents said they currently use AI in their work, but about 80% believe it could help their agency in the future. Respondents have mixed feelings about AI chatbots and similar technology, with more than half saying it’s too soon to tell. For now, though, they appear to trust humans more than they trust AI.

In-Depth Perspectives

We also asked respondents to share more detailed replies to a few questions.

What’s your AI experience so far?

“I’ve used it for generating potential interview questions for a position and devising a scoring rubric for that interview. (It did a pretty good job!) I’ve fed it information about my agency and then asked what sort of general competency framework may be helpful to develop.… I used it to generate a training outline on a specific topic. I’ve ‘role played’ with it (prompted it to take on a certain characteristic or role). I’ve asked it to write silly work poems. I used it to ask if a script I wanted to run on my home computer was malicious. Mostly, I’ve used it for brainstorming new ideas.”

“As a person with a disability outside of the norm, it makes me uncomfortable.”

“I don’t have any experience as a developer, but as a regular person walking down the street, I love it, and I’m so excited for what it could be, but at the same time, I’m concerned about the possible implications and risks.”

How could AI be useful at your agency?

“If the rumors are true, it would be useful for everyone and everything: design, writing, coding, customer support, organizing, etc.”

“Creating rough drafts that can then be edited for use.”

“Grant review, data review.”

“Planning, budgeting and execution of resources.”

“IT: being able to help with actual app dev training, being able to propose training outlines, knowledge-check questions, debug eLearning code.… There’s so much potential for these tools.”

“Organizing data, perhaps faster identification of resource data.”

What would make agencies more likely to use AI?

“It’s pretty usable now, I would say. My only fear is that more conservative agencies won’t believe that the benefits outweigh any of the risks and would rather ban it outright than come up with and enforce reasonable guidelines on how to use it effectively without violating policies or laws.”

“They still need to be viewed as computers and not metal humans.”

“Security, privacy, regulations and risk management should be priorities and must be in place before it’s implemented.”

“There needs to be clear standards around discriminatory conduct that evidence already shows is baked into many AI tools.”

“Guidance and regulations needed. Potential risks need to be understood and corrected if possible. AI is still not understood by humans, so how do they know what to feed it?”

“We need to talk about it in terms of assistance for writing drafts and create policies and agency cultures that reflect a deep understanding of the need for meaningful human review and editing. It’s an aide…and it’s not a fix-all solution to understaffing and workload issues.”

This article appears in our new guide, “AI: A Crash Course.” Download the guide to read more about how AI can (and will) change your work.